Standing Out at CES with Spatial Computing

- 3D Animation

- Augmented Reality

- Development

(01) The Challenge

Engage the trade show audience with a unique experience

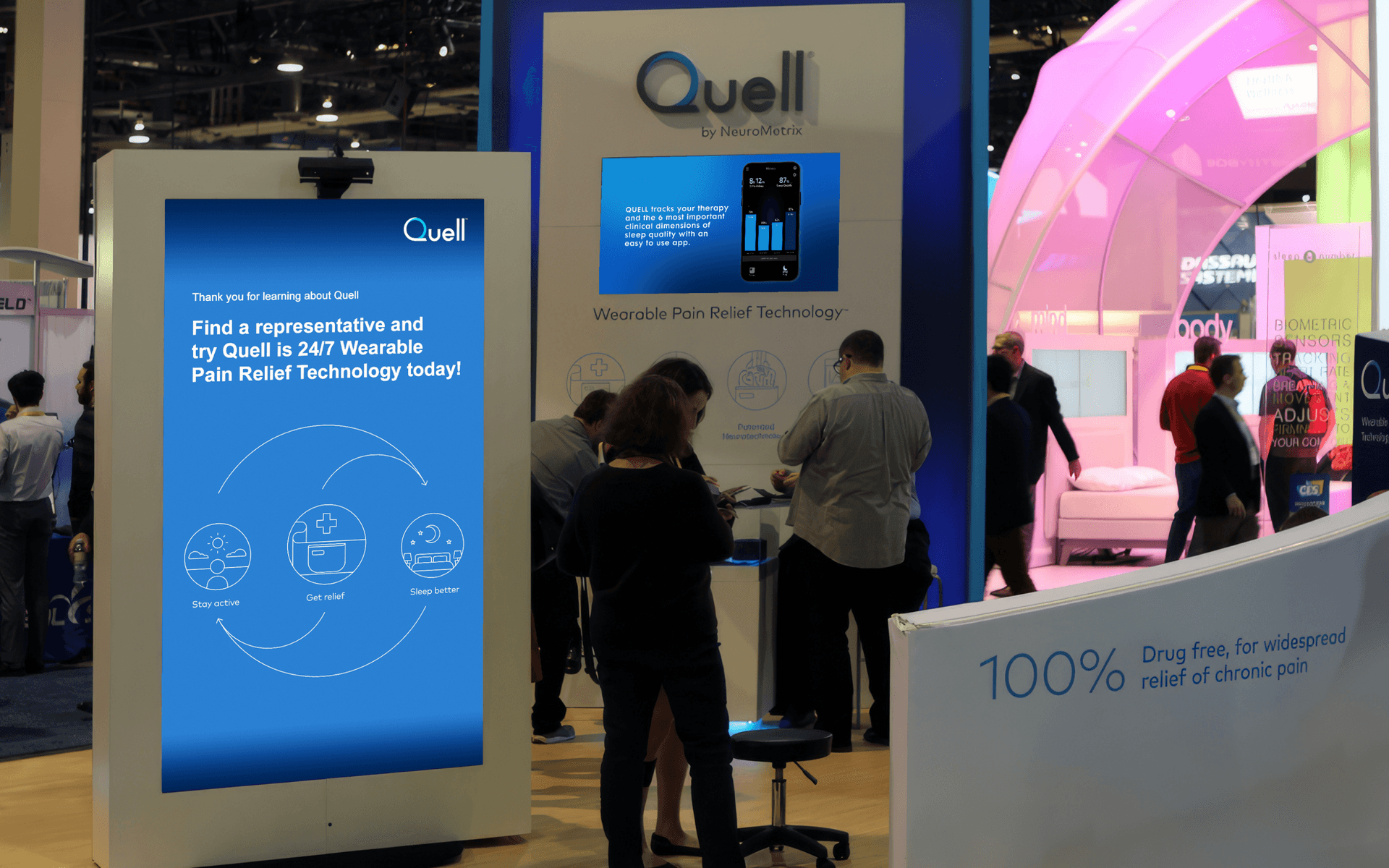

At a top-tier event like the Consumer Electronics Show (CES) in Las Vegas, it is difficult to stand out from the crowd. NeuroMetrix was launching Quell, a new wearable technology for chronic pain relief. To introduce the product, they wanted an unforgettable experience that would capture the audience’s attention and encourage attendees to interact with the brand ambassadors.

(02) The Solution

Real-time motion capture grabbed their attention

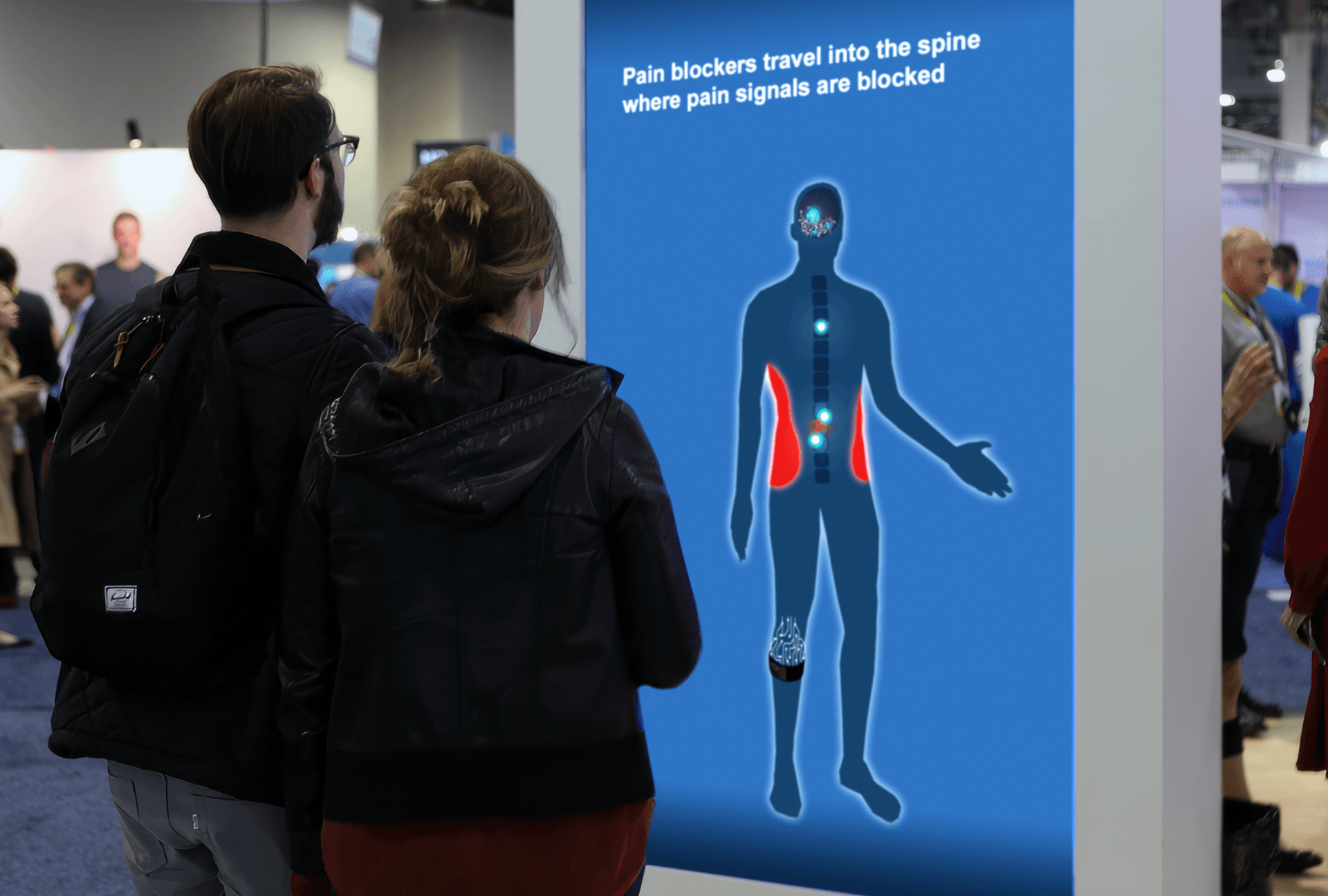

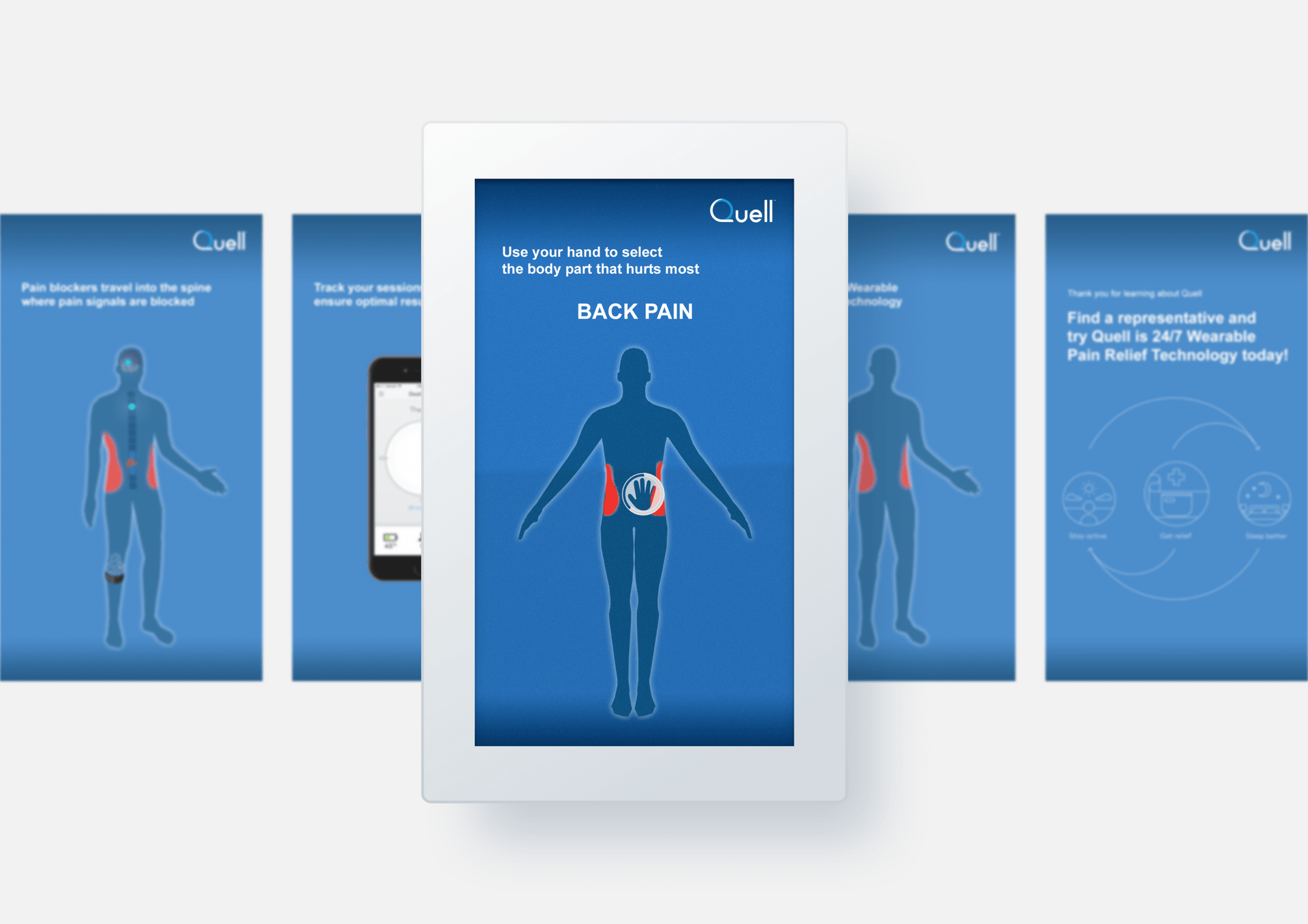

Computer vision can turn your body into a live input for an interactive experience. We knew that pairing the Microsoft Kinect with Unity would produce a stunning display. As attendees walked by the booth, the Kinect detected them and instantly presented a live avatar on a massive 90″ screen. The avatar mirrored each attendee’s movements, creating an engaging, interactive experience. To demonstrate how Quell works, dynamic animations illustrated how the device’s electrical impulses trigger a chemical reaction in the brain, effectively blocking pain. Together, the live avatar and the animations grabbed attendees’ attention and piqued their interest in NeuroMetrix’s new product.

(03) The Result

Exceeding Sales Goals and Generating Qualified Leads

Fast Forward’s approach of using Kinect and Unity to showcase Quell’s mechanism of action paid off. Attendees were not only delighted by the live avatar in front of them, but also impressed by Quell’s potential to provide widespread relief from chronic pain. The engaging demonstration resulted in a significant increase in product sales and a surge in qualified leads. Quell surpassed its sales goals and drew strong attention and interest from CES attendees.

(04) Behind the Curtain

Technical Notes

The Microsoft Kinect’s skeletal tracking feature gave us a 3D representation of each user. We mapped this tracked skeleton onto the rig of a 3D character model so that the character moved in real time. Hand tracking let attendees use a hand like a mouse. To simulate neural signals moving through the body, we created a custom particle controller that moved signal particles through the extremities up to the brain and back down into the spine. By toggling submeshes in the model, we could highlight the pain areas in a different color.
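For readers curious how two of these pieces might work, here is a minimal, language-agnostic sketch in Python. It is not the Unity code from the booth. The function names, the interaction box, and the joint coordinates are all hypothetical, chosen only to illustrate the idea: one function maps a tracked hand position to screen pixels (the "hand as mouse" behavior), and the other moves a signal particle along a path of joint positions.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def hand_to_cursor(hand_x: float, hand_y: float,
                   box: Tuple[float, float, float, float],
                   screen: Tuple[int, int]) -> Tuple[int, int]:
    """Map a hand position in sensor space to screen pixels.

    box is (min_x, min_y, max_x, max_y): an assumed interaction region
    in front of the user. Positions outside the box are clamped to it.
    """
    min_x, min_y, max_x, max_y = box
    u = min(max((hand_x - min_x) / (max_x - min_x), 0.0), 1.0)
    v = min(max((hand_y - min_y) / (max_y - min_y), 0.0), 1.0)
    width, height = screen
    # Sensor y points up while screen y points down, so flip v.
    return round(u * (width - 1)), round((1.0 - v) * (height - 1))


def point_on_path(path: List[Vec3], t: float) -> Vec3:
    """Return the point a fraction t (0..1) of the way along a polyline.

    The fraction is measured by distance travelled, so a particle moves
    at a constant speed regardless of how long each segment is.
    """
    segments = list(zip(path, path[1:]))
    lengths = [math.dist(a, b) for a, b in segments]
    remaining = min(max(t, 0.0), 1.0) * sum(lengths)
    for (a, b), length in zip(segments, lengths):
        if length > 0 and remaining <= length:
            f = remaining / length
            return tuple(a[i] + f * (b[i] - a[i]) for i in range(3))
        remaining -= length
    return path[-1]


# Made-up joint positions (metres) for one signal route:
# hand -> elbow -> shoulder -> head -> base of spine.
signal_path: List[Vec3] = [
    (0.6, 0.9, 0.0),
    (0.4, 1.1, 0.0),
    (0.2, 1.4, 0.0),
    (0.0, 1.7, 0.0),
    (0.0, 0.9, 0.0),
]

# Sample the particle position at a few points along its journey.
for step in range(5):
    print(point_on_path(signal_path, step / 4))
```

In a live installation, the joint positions would be refreshed from the tracked skeleton every frame, so the path bends with the attendee's body while each particle advances its own `t` value over time.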